AI Feedback Assistant: Instructor

Getting Started

I was a member of an artificial intelligence/machine learning (AI/ML) research and development team that faced a number of design challenges with early-stage products built on natural language processing (NLP) components. Drawing on my background in design, statistics, psychometrics, and psychology, I helped solve design and data challenges in building a proof of concept for an AI assistant that helps college-level instructors grade and give feedback on student writing.

The AI assistant was designed to work alongside college-level instructors to grade and provide feedback on student writing. The project included the following steps:

- Generative research, including a survey, interviews, and cognitive walkthroughs with instructors at a large university
- Design thinking sessions with stakeholders and initial proof of concept designs
- Concept testing with instructors
- Iterating on designs

Client

Pearson & a Large University

Duration

6 months

Role on Project

AI research & design

Skills Demonstrated

Quant & qual research

Product strategy

Concept testing

Proof of concept design

User flows

Interaction design

The Team

Learning Design

Four learning researchers and designers

External University

Approximately four core project team members from a large university's innovation center

AI Team

- 2 data scientists, ML modeling
- 1 VP, AI products & solutions (background: cognitive psychology & CS)
- 1 data scientist, AI research & design (me!)

Instructor Survey

Survey development began by iterating on a list of writing skills and feedback practices, then developing items to assess each one.

Survey Objectives

- To help us understand which writing skills and feedback practices an automated feedback system should focus on
- To determine the writing skills students struggle with the most
- To identify the feedback practices that are most effective in improving writing skills


Example Writing Skills Items

Rate the extent to which you think students tend to struggle with the context of and purpose for writing.

Never - Rarely - Occasionally - Often - Always

How effective are you in helping students improve the context of and purpose for writing?

Not at all effective - Slightly effective - Moderately effective - Effective - Very effective

How often do you provide feedback around the context of and purpose for writing?

Never - Rarely - Occasionally - Often - Always

Example Feedback Characteristics Items

Rate how important you believe praise highlighting something specific that the student has done well is for improving writing skills in general

Not at all important - Slightly important - Moderately important - Important - Very important

Indicate how often you provide praise highlighting something specific that the student has done well when grading writing

Never - Rarely - Occasionally - Often - Always
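Responses to items like these are ordinal. As a minimal sketch of how they could be turned into numbers for descriptive summaries, the code below assumes each 5-point label is coded 1 to 5 in scale order, a common convention rather than a documented project choice. The helper names and the responses are hypothetical and for illustration only.

```python
from statistics import mean, median

# Hypothetical integer coding for the 5-point scales shown above (1 = lowest, 5 = highest).
FREQUENCY_SCALE = ["Never", "Rarely", "Occasionally", "Often", "Always"]
EFFECTIVENESS_SCALE = [
    "Not at all effective",
    "Slightly effective",
    "Moderately effective",
    "Effective",
    "Very effective",
]


def code_responses(responses, scale):
    """Map each Likert label to its 1-based position on the given scale."""
    codes = {label: i + 1 for i, label in enumerate(scale)}
    return [codes[r] for r in responses]


def summarize(scores):
    """Return simple descriptive statistics for one survey item."""
    return {"n": len(scores), "mean": mean(scores), "median": median(scores)}


# Illustrative answers to a "how often do students struggle with..." item (not survey data).
struggle = code_responses(["Often", "Always", "Occasionally", "Often"], FREQUENCY_SCALE)
print(summarize(struggle))
```

Treating the codes as equally spaced is what makes a mean meaningful; reporting the median alongside it is a hedge against that assumption.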

Writing Skills Insights

- Instructors reported being less effective at addressing student struggles with:
  - Sources and evidence
  - Control of syntax and mechanics
- Instructors provided feedback on sources and evidence most frequently
- Students struggle the most with:
  - Content development
  - Sources and evidence
  - Control of syntax and mechanics


Feedback Characteristics Insights

The most important feedback characteristics:

- Motivational statements
- Praise about what a student did well
- Global feedback


Sample

- Goal: 500 responses
- Sent the survey to a random sample of 1,000 instructors (out of 5,000, or 20%)
- Estimated response rate: 50% (the survey was sent out by university administrators)
- Received 535 responses
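The sampling plan follows from dividing the response goal by the expected response rate. A minimal sketch of that arithmetic and of drawing a simple random sample without replacement is below; it assumes a roster of 5,000 instructor IDs, and the IDs, the seed, and the helper name are hypothetical.

```python
import math
import random


def invitations_needed(goal, expected_rate):
    """Invitations required to expect `goal` completed responses at `expected_rate`."""
    return math.ceil(goal / expected_rate)


population = list(range(1, 5001))           # hypothetical IDs for 5,000 instructors
n_invites = invitations_needed(500, 0.50)   # 500 / 0.5 = 1,000 invitations
rng = random.Random(42)                     # fixed seed so the draw is reproducible
invited = rng.sample(population, n_invites) # simple random sample, no repeats

sampling_fraction = n_invites / len(population)  # share of instructors invited
observed_rate = 535 / n_invites                  # realized response rate
print(n_invites, sampling_fraction, observed_rate)
```

With 535 completed responses out of 1,000 invitations, the realized response rate (53.5%) came in slightly above the 50% estimate, which is why the goal of 500 was exceeded.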

Sample of Descriptive Results

Sample of Exploratory Regression Results

Exploratory regressions used how often instructors give a particular feedback characteristic (e.g., praise, localized feedback) to predict how effective they report being at improving a particular writing skill (e.g., content development).
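As a minimal sketch of one such exploratory model, the code below assumes a simple ordinary least squares regression with a single predictor and Likert responses coded 1 to 5. The models, covariates, and software actually used in the project are not described here, and the data are made up for illustration.

```python
from statistics import mean


def simple_ols(x, y):
    """Fit y = intercept + slope * x by ordinary least squares.

    Returns (intercept, slope, r_squared). Raises ZeroDivisionError if x is constant.
    """
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # R^2 = 1 - residual sum of squares / total sum of squares
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return intercept, slope, 1 - ss_res / ss_tot


# Hypothetical coded responses (1-5): how often each instructor gives praise (predictor)
# and how effective they rate themselves at improving content development (outcome).
praise_frequency = [2, 3, 3, 4, 5, 4, 1, 5]
content_effectiveness = [2, 3, 4, 4, 5, 3, 2, 4]

intercept, slope, r2 = simple_ols(praise_frequency, content_effectiveness)
print(f"intercept={intercept:.2f}, slope={slope:.2f}, R^2={r2:.2f}")
```

A positive slope would indicate that instructors who give a characteristic more often also rate themselves as more effective on that skill. Because both variables are self-reported, the relationship is associational, not causal.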


Instructor Interviews & Cognitive Walkthroughs

Seven college-level instructors participated in a 45-minute interview and a 45-minute cognitive walkthrough of how they typically grade and provide feedback on student writing.

Research Questions

- Why do instructors assign writing (i.e., what is the purpose of their writing assignments)?
- How do instructors prepare learners for feedback?
- Understand the current state of grading and providing feedback on writing
- How and when do instructors give feedback?
- How much do they focus on citations and format?
- How does the feedback given relate to the purpose of the writing assignment?
- What writing skills do students struggle with the most and the least?
- What attributes of the writing influence scoring and feedback decisions?
- In general, how do instructors approach grading and giving feedback?
- What, if anything, do instructors perceive as the most valuable feedback to help students improve from one assignment to the next, and why?
- How do instructors perceive the relationship between feedback and grading rubrics?
- What would an ideal grading experience be?

Interview & Walkthrough Objectives

- To determine instructor wants, needs, pain points, frustrations, behaviors, and overall experience surrounding grading and providing feedback on student writing.


Interview & Walkthrough

Key Insights

- APA format and course content are important
- Grading and providing feedback need to be more efficient
- Students often don't read or incorporate feedback


Results

Results of the interviews and cognitive walkthroughs were synthesized into a persona, user stories, and a journey map.

View a comprehensive list of user stories

Generative Research

Ideating

Stakeholder Design Thinking Workshop

About 15 internal and external stakeholders took part in a design thinking workshop. Participants were shown research results and given the following problem to solve: grading and providing feedback on student writing is very time-consuming, so how can we automate feedback recommendations that an instructor can use to speed up the process and give students higher-quality formative writing feedback?

The workshop produced a number of different concepts. I mocked up two of them, including one of my own, for testing with instructors, and developed the initial information architecture of the system.

Initial Design & IA

Concept Testing & Final Proof of Concept User Flow

Concept Testing

Three university instructors participated in a 2-hour concept test exploring the idea of an AI assistant that automatically scores writing and recommends feedback. The instructors were shown design mock-ups and asked to give feedback on the general ideas and designs. Results from the concept testing were used to iterate on a final user flow for the project's proof of concept.

Concept Testing

Key Insights

- Instructors were excited about the idea overall: anything that helps them spend less time grading is good.
- Add information on how the AI works.
- Keep all feedback boxes open and limit the number of clicks needed to finalize a paper before sending it to the student.
- Provide an expandable rubric to view all categories at once.
- Include overall rubric element feedback in the expandable rubric.


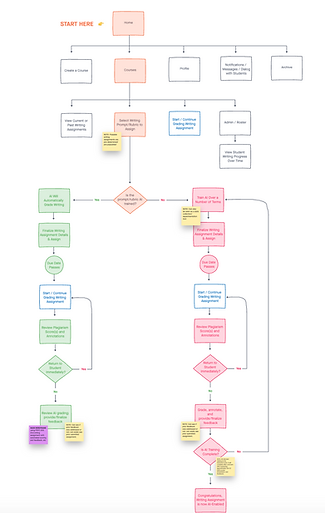

User Flow

Results from the concept testing were used to iterate on a user flow for the project's final proof of concept. Click any of the images to view the full user flow in Miro.

View prototype in InVision